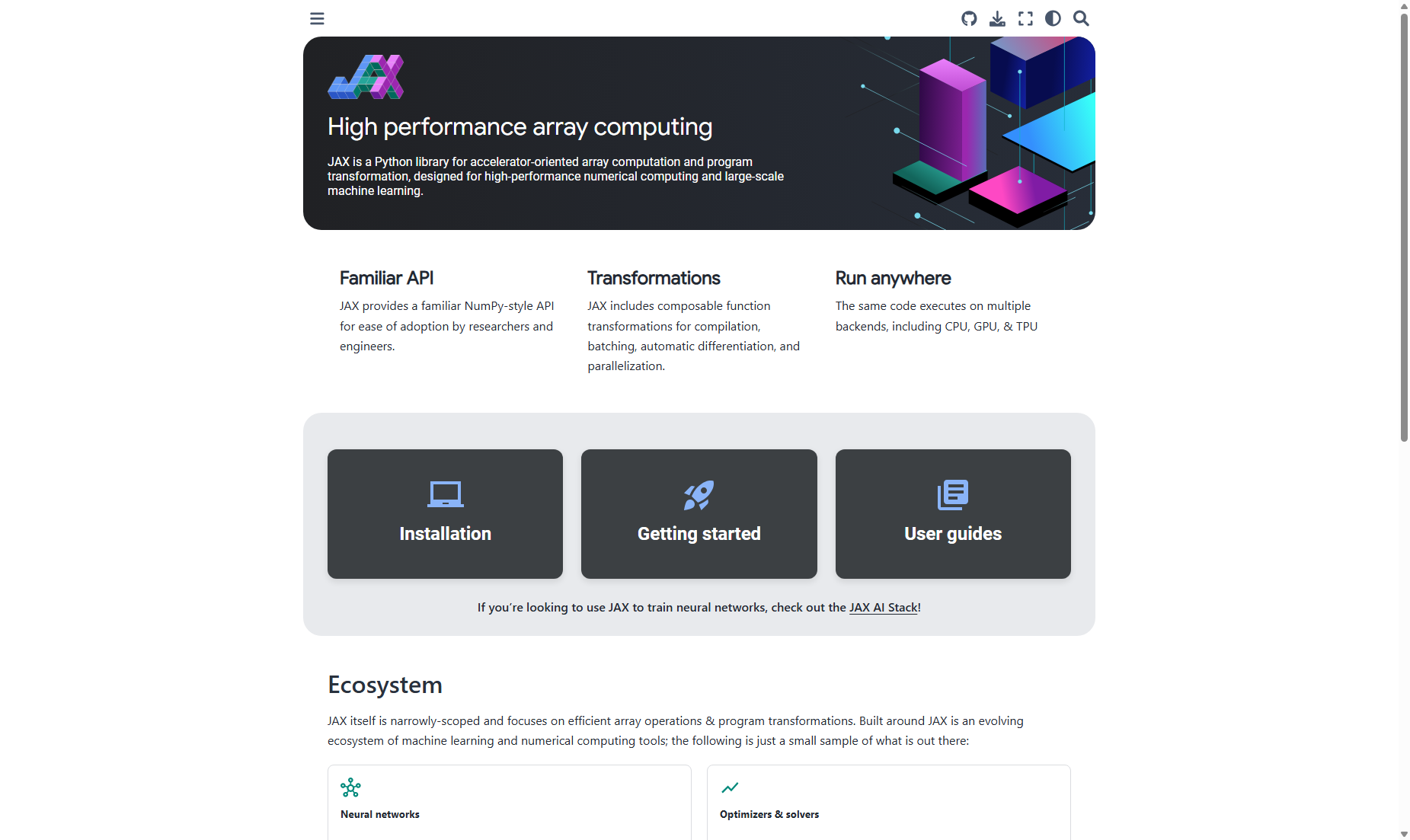

JAX Documentation

JAX is a library for high-performance numerical computing with features like JIT compilation and automatic differentiation.

JAX: High Performance Array Computing

JAX is a high-performance library for array-based numerical computing. It is aimed at workloads that run heavy computation over large arrays, which makes it suitable for scientific computing and machine learning. JAX provides Just-In-Time (JIT) compilation, automatic differentiation, and execution on GPUs and TPUs for accelerated processing.
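As a rough illustration of the array-based style described above, the following minimal sketch uses `jax.numpy` and `jax.jit`. The `normalize` function and the sample array are hypothetical and chosen only for illustration; they do not come from the JAX documentation.

```python
import jax
import jax.numpy as jnp

def normalize(x):
    # Shift each column to zero mean and scale it to unit standard deviation.
    return (x - x.mean(axis=0)) / x.std(axis=0)

# jax.jit traces the function for a given input shape/dtype and compiles it with XLA.
normalize_jit = jax.jit(normalize)

x = jnp.arange(12.0).reshape(4, 3)  # small illustrative array
y = normalize_jit(x)

print(y.shape)
print(jax.devices())  # backend devices available on this machine (CPU, GPU, or TPU)
```

The same code runs unchanged on CPU, GPU, or TPU; which device is used depends on the installed JAX backend.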

Features and Functionalities

- Just-In-Time Compilation: JAX can compile Python functions into optimized code for fast execution. This is particularly useful for computations that run repeatedly and can significantly reduce runtime.
- Automatic Vectorization: Apply a function across a batch of inputs without writing explicit loops, which keeps code concise and efficient.
- Automatic Differentiation: JAX can compute gradients of complex functions, a vital step in machine learning for optimizing neural networks.
- Parallel Programming: JAX supports parallel computation across multiple devices, which is crucial for distributed computing tasks.
- Pytrees: JAX provides utilities for organizing and manipulating pytrees, nested Python containers such as lists, tuples, and dicts of arrays, which are often used to manage model parameters and other structured data.
- Advanced Debugging Tools: JAX includes tools that assist development, covering runtime value debugging and profiling of computations.
- Interoperation with TensorFlow: JAX can interoperate with TensorFlow, allowing users to draw on the TensorFlow ecosystem when necessary.
- Pallas Kernel Language: JAX offers the Pallas kernel language for writing custom kernels, particularly for TPUs and GPUs (including the Mosaic GPU backend), enabling low-level performance optimizations.
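Several of the features listed above compose with one another. The sketch below combines `jax.vmap`, `jax.grad`, `jax.jit`, and a pytree of parameters on a small linear model. The model, the data, and the step size `0.01` are assumptions made up for this example, not something prescribed by JAX.

```python
import jax
import jax.numpy as jnp

def predict(params, x):
    # Linear model; params is a pytree (here, a dict of arrays).
    return jnp.dot(x, params["w"]) + params["b"]

def loss(params, x, y):
    # Squared error for a single example.
    return (predict(params, x) - y) ** 2

params = {"w": jnp.array([1.0, -2.0]), "b": jnp.array(0.5)}
xs = jnp.array([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]])
ys = jnp.array([0.0, 1.0, 2.0])

# vmap maps the per-example loss over the leading axis of xs and ys;
# in_axes=None means params is shared across all examples.
batched_loss = jax.vmap(loss, in_axes=(None, 0, 0))

def mean_loss(params, xs, ys):
    return jnp.mean(batched_loss(params, xs, ys))

# grad differentiates with respect to the first argument, so the result
# has the same pytree structure as params. jit compiles the whole thing.
grads = jax.jit(jax.grad(mean_loss))(params, xs, ys)

# tree_map applies a function leaf by leaf: one gradient-descent step.
new_params = jax.tree_util.tree_map(lambda p, g: p - 0.01 * g, params, grads)
```

Writing the loss for a single example and letting `vmap` handle the batch dimension keeps the per-example logic free of explicit loops and batch indexing.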

Overview of Documentation Sections

- Getting Started & Installation: Guidance on setting up JAX and its initial configuration.
- Key Concepts: The core principles needed to understand how JAX operates.
- Tutorials: In-depth tutorials that range from basic to advanced topics and show how to apply JAX concepts in practice.
- Guides & Resources: User guides, profiling tips, and memory management techniques, especially for devices like GPUs.
- Advanced Topics: Detailed sections on advanced differentiation, stateful computations, and control flow and logical operators, intended to prepare users for complex computational tasks.
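To give a flavor of the control-flow topic mentioned in the list above, here is a minimal sketch of structured control flow under `jax.jit`, using `jax.lax.cond` and `jax.lax.fori_loop`. The functions `safe_sqrt` and `sum_of_squares` are hypothetical helpers written for this illustration.

```python
import jax
import jax.numpy as jnp
from jax import lax

@jax.jit
def safe_sqrt(x):
    # Under jit, x is a traced value, so a Python `if x >= 0:` would fail.
    # lax.cond picks a branch based on a traced boolean instead.
    return lax.cond(x >= 0, lambda v: jnp.sqrt(v), lambda v: jnp.zeros_like(v), x)

@jax.jit
def sum_of_squares(n):
    # lax.fori_loop expresses a loop whose trip count is a traced value.
    init = jnp.zeros((), dtype=jnp.int32)
    return lax.fori_loop(0, n, lambda i, acc: acc + i * i, init)

root_pos = safe_sqrt(jnp.array(4.0))   # sqrt branch
root_neg = safe_sqrt(jnp.array(-1.0))  # zero branch
total = sum_of_squares(5)              # 0 + 1 + 4 + 9 + 16
```

Both branches of `lax.cond` must return values of the same shape and dtype, which is why the negative case returns `jnp.zeros_like(v)` rather than a Python constant.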

JAX expresses complex numerical computation through composable function transformations that are both efficient and scalable, which makes it a strong choice for developers in scientific computing and machine learning.

Summary: JAX is a library for high-performance numerical computing on large arrays, emphasizing JIT compilation, automatic differentiation, and GPU/TPU acceleration, ideally suited for scientific and machine learning applications.